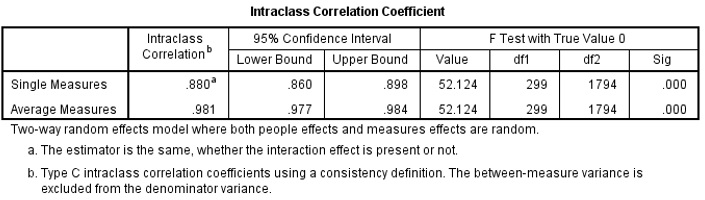

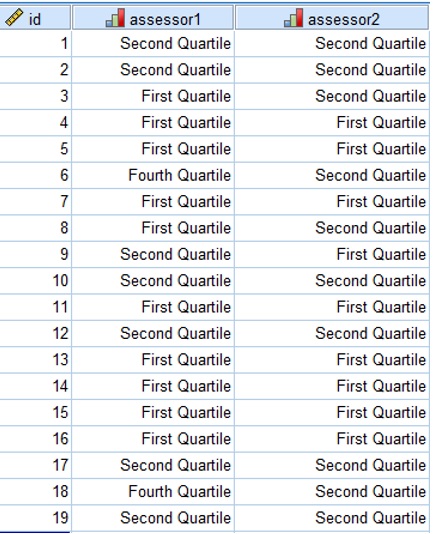

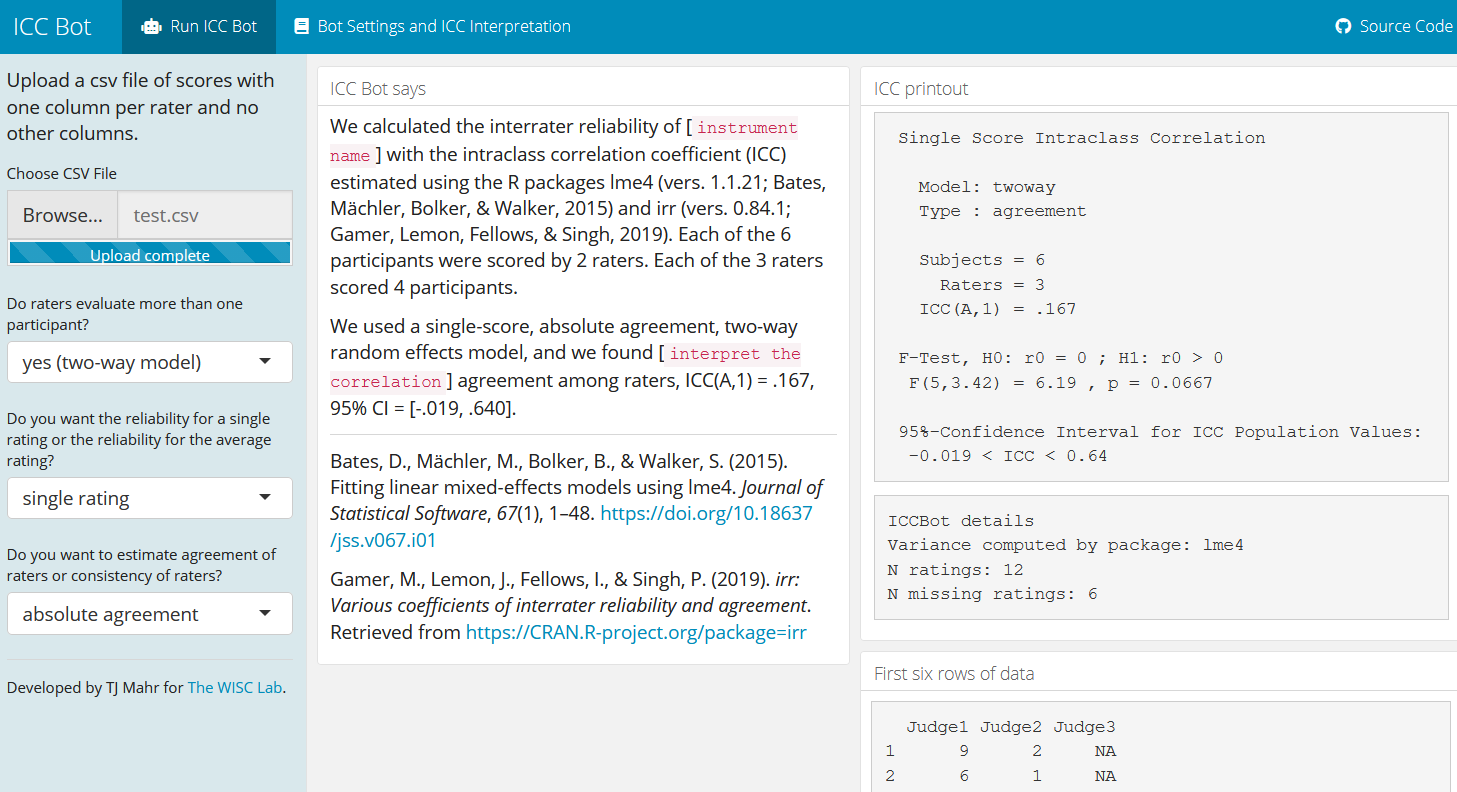

Test–retest reliability of the Cost for Patients Questionnaire | International Journal of Technology Assessment in Health Care

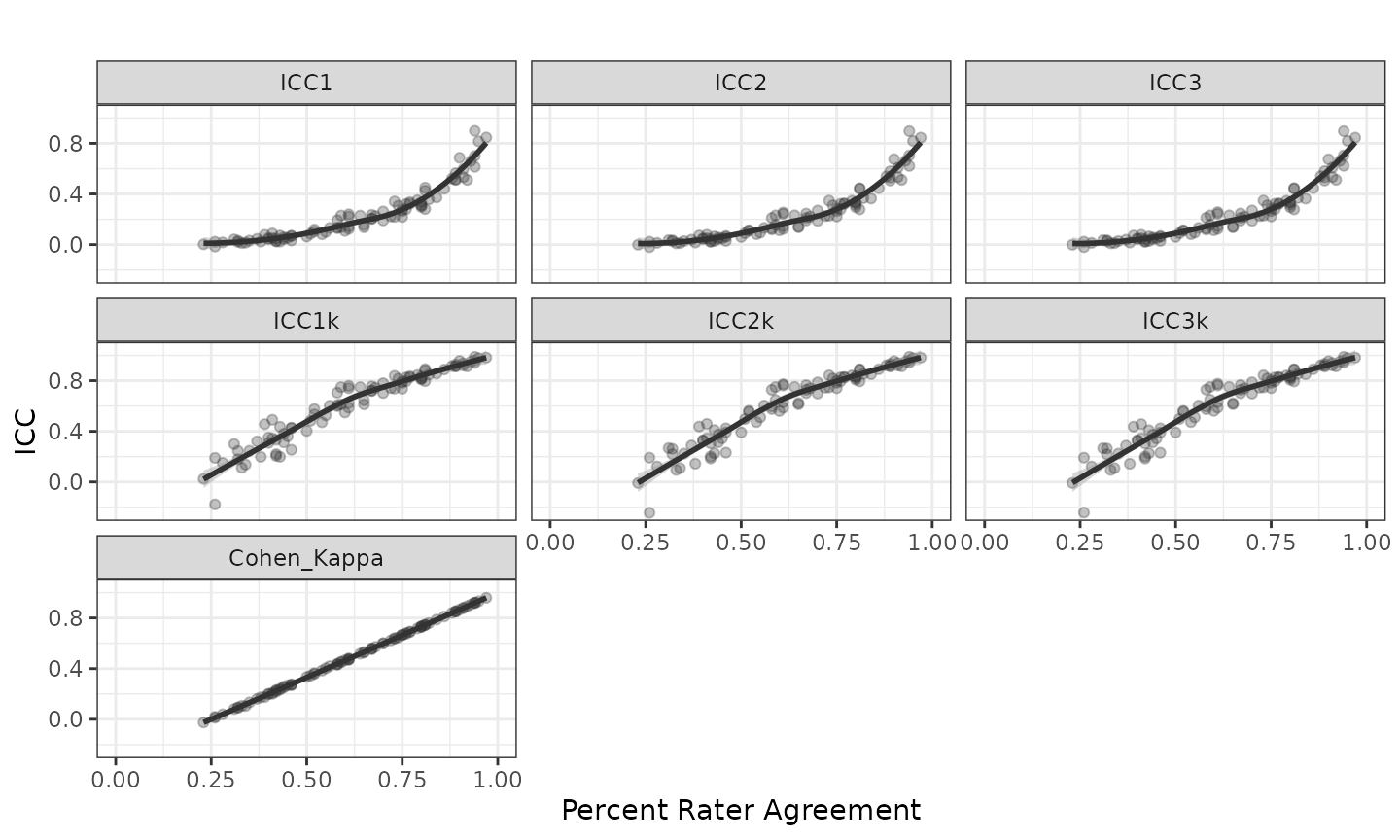

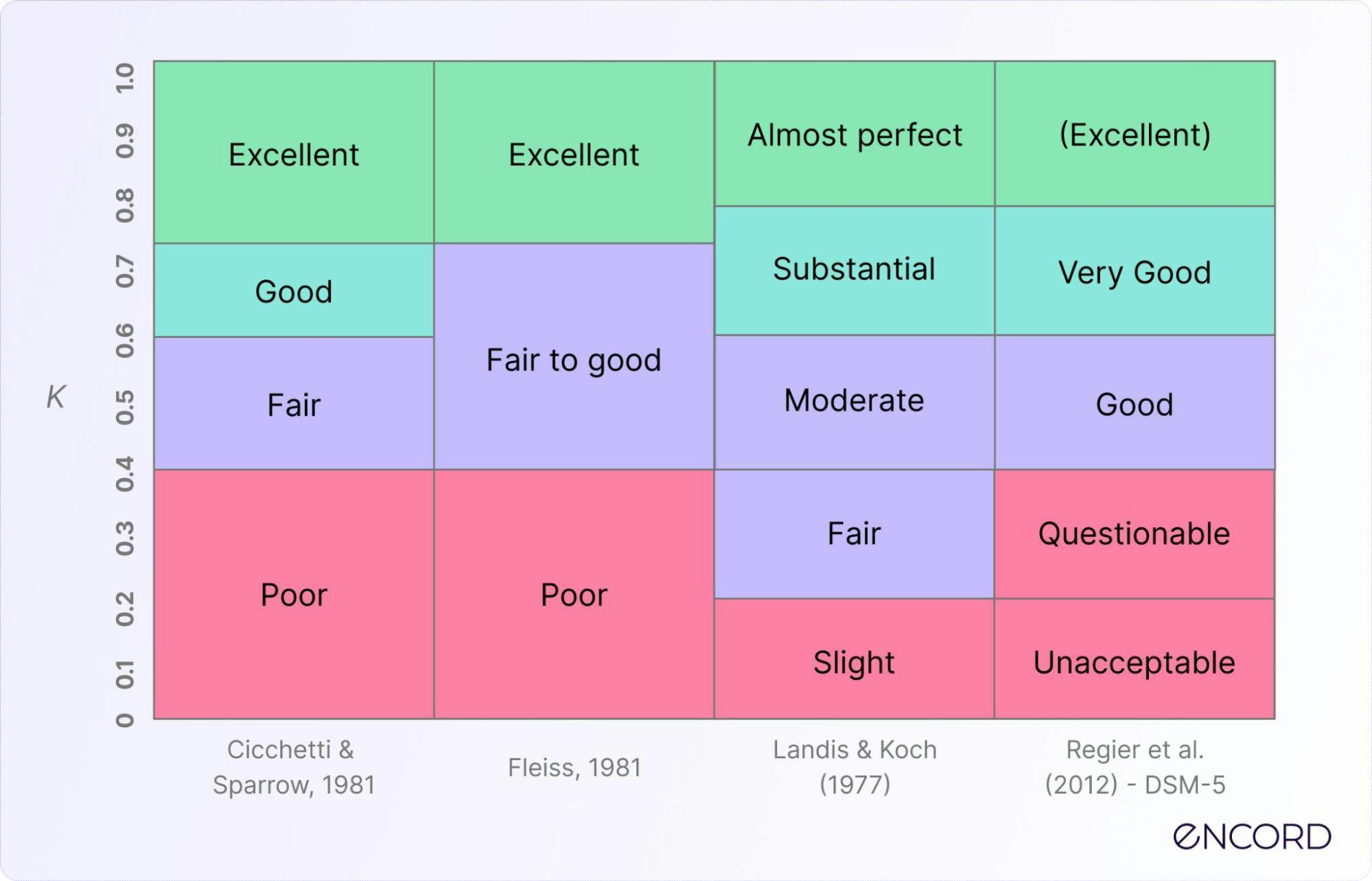

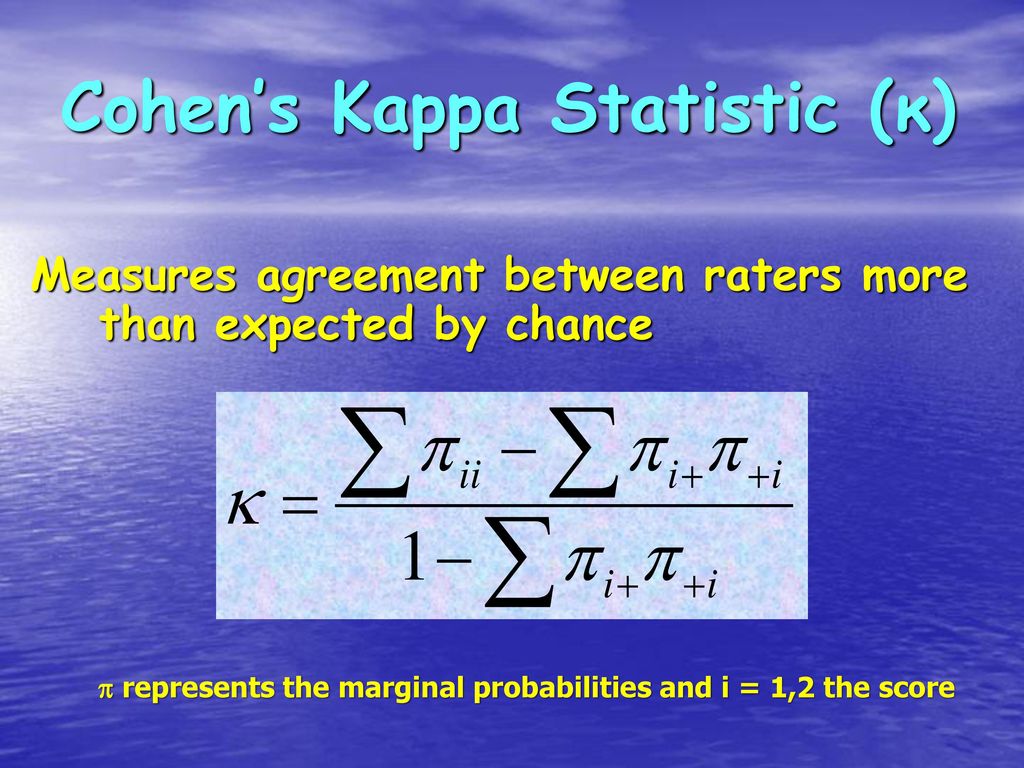

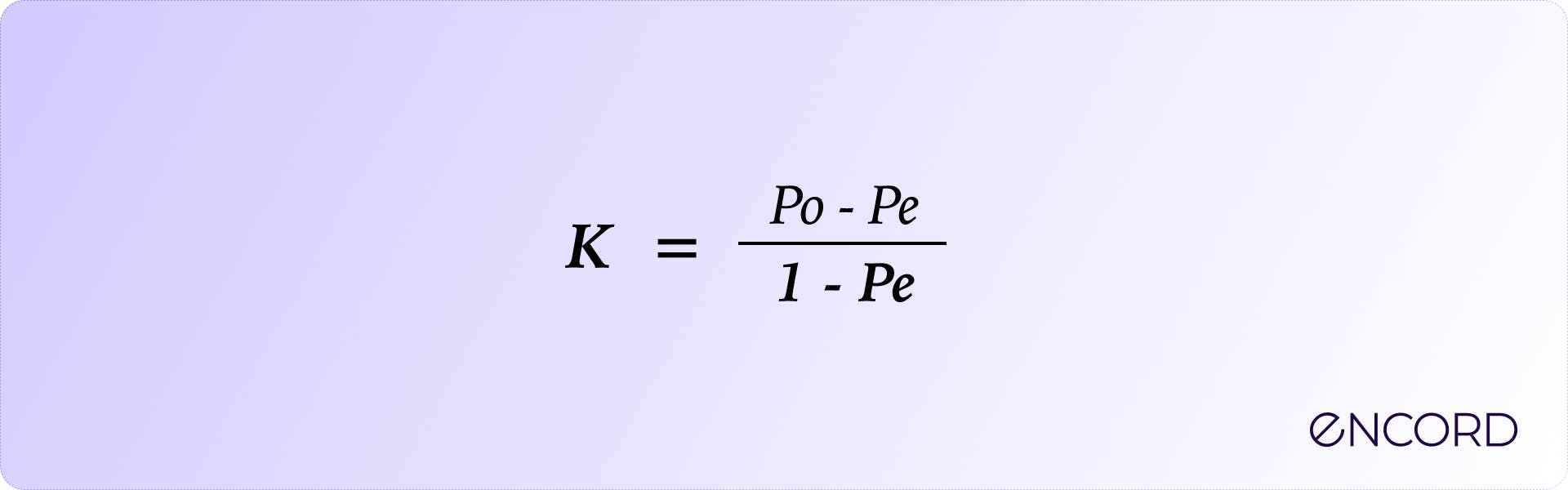

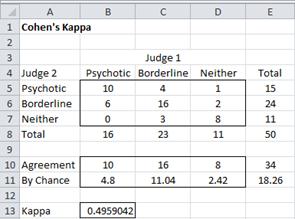

of results (percent agreement). Cohen's kappa statistic (κ) - degrees...